DESCRIPTION

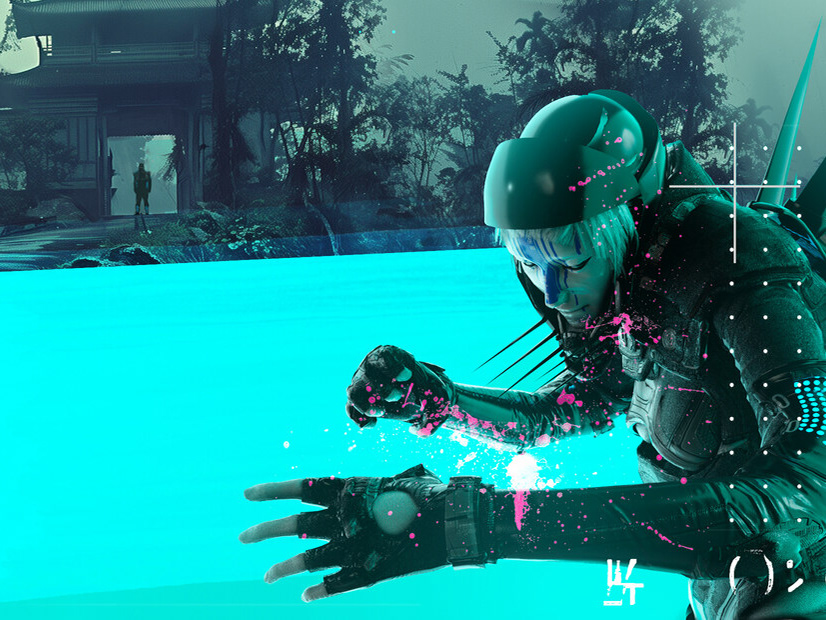

Wiggle Room was an interactive Unity installation developed for Belfast’s 2024 cultural celebrations. The experience translated visitors’ real-world movement into responsive on-screen interactions, letting them control and engage with digital objects in a virtual space. The installation ran at the MAC (Metropolitan Arts Centre) from 19 September 2024 into early 2025.

ROLE

I was the Main Unity Developer responsible for translating design proofs into a complete interactive experience, combining gameplay/interaction programming with real-time tracking hardware integration.

KEY CONTRIBUTIONS

- Developed user tracking logic that detects entry/exit and manages transitions into active interaction.

- Implemented object behaviours and NPC appearances so that the environment responded dynamically to user movement and activity.

- Built the interaction manager that coordinates these systems, handling state, events, and behaviour flow in response to tracking data.

- Integrated and calibrated ZED 2i body-tracking hardware in Unity to run consistently in a live public setting.

- Supported the multi-machine setup so tracking computation and Unity visuals stayed synchronised during exhibition hours.

CHALLENGES

Partway through the project, we switched from Orbbec cameras to ZED 2i hardware. I had to configure the new machines and rework the tracking integration and interaction behaviours on the new setup, which was a good test of adapting under pressure while keeping things stable for the live installation.

TECHNICAL

- Unity (C#): Interaction systems, NPC/object behaviour, and tracking logic

- ZED 2i: Body-tracking hardware integration and calibration

- Data synchronisation: Consistent, uninterrupted delivery of body-tracking data to Unity throughout exhibition hours

VIDEO